Writing Tests

Previously, we learned the basic concepts behind Touca and how to use the Touca CLI to capture the output of a given piece of software and detect changes in its behavior. In this tutorial, we'll use Touca SDKs to find software regressions by capturing the values of variables and the runtime of functions.

Let's suppose we're building software that takes the username of a student and provides basic information about them.

- Python

- C++

- JavaScript

- Java

def find_student(username: str) -> Student:

Student find_student(const std::string& username);

async function find_student(username: string): Promise<Student>;

public static Student findStudent(final String username);

Here, the Student type could be defined as follows:

- Python

- C++

- JavaScript

- Java

@dataclass
class Student:
    username: str
    fullname: str
    dob: datetime.date
    gpa: float

struct Student {
    std::string username;
    std::string fullname;
    Date dob;
    float gpa;
};

interface Student {
    username: string;
    fullname: string;
    dob: Date;
    gpa: number;
}

public final class Student {
    public String username;
    public String fullname;
    public LocalDate dob;
    public double gpa;
}

You can find the source code for this example on our GitHub under the examples/<lang>/02_<lang>_main_api directory. If you'd like to follow along, this is a great time to clone the repository:

git clone git@github.com:trytouca/trytouca.git

The students module represents our code under test, which could be arbitrarily complex. It may call various nested functions, perform database lookups, and access other services to retrieve the requested information. Here's a simple implementation to start with:

- Python

- C++

- JavaScript

- Java

def find_student(username: str) -> Student:
    sleep(0.2)
    data = next((k for k in students if k[0] == username), None)
    if not data:
        raise ValueError(f"no student found for username: {username}")
    return Student(data[0], data[1], data[2], calculate_gpa(data[3]))

Student find_student(const std::string& username) {
    std::this_thread::sleep_for(std::chrono::milliseconds(200));
    if (!students.count(username)) {
        throw std::invalid_argument("no student found for username: " + username);
    }
    const auto& student = students.at(username);
    return {
        student.username,
        student.fullname,
        student.dob,
        calculate_gpa(student.courses)
    };
}

export async function find_student(username: string): Promise<Student> {
    await new Promise((v) => setTimeout(v, 200));
    const data = students.find((v) => v.username === username);
    if (!data) {
        throw new Error(`no student found for username: ${username}`);
    }
    const { courses, ...student } = data;
    return { ...student, gpa: calculate_gpa(courses) };
}

public static Student findStudent(final String username) {
    try {
        Thread.sleep(200);
    } catch (InterruptedException ex) {
        // Thread.sleep throws a checked exception; restore the interrupt flag.
        Thread.currentThread().interrupt();
    }
    for (Student student : Students.students) {
        if (student.username.equals(username)) {
            return new Student(student.username, student.fullname, student.dob,
                calculateGPA(student.courses));
        }
    }
    throw new NoSuchElementException(
        String.format("No student found for username: %s", username));
}
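The snippets above rely on a students collection and a calculate_gpa helper that this page does not show. As a minimal, self-contained sketch in Python, the module could look like the following. Note the assumptions: the record for bob, all course grades, and the GPA formula (a plain average) are made up for illustration and are not taken from the example repository.

```python
import datetime
from dataclasses import dataclass
from time import sleep
from typing import List


@dataclass
class Student:
    username: str
    fullname: str
    dob: datetime.date
    gpa: float


# Hypothetical sample data: (username, fullname, date of birth, course grades).
# The record for "bob" and all course grades are invented for this sketch.
students = [
    ("alice", "Alice Anderson", datetime.date(2006, 3, 1), [4.0, 3.8]),
    ("bob", "Bob Brown", datetime.date(1996, 6, 30), [3.7, 3.9]),
]


def calculate_gpa(grades: List[float]) -> float:
    # Assumed formula: the arithmetic mean of the course grades.
    return sum(grades) / len(grades) if grades else 0.0


def find_student(username: str) -> Student:
    sleep(0.2)  # simulate a slow lookup, as in the snippet above
    data = next((k for k in students if k[0] == username), None)
    if not data:
        raise ValueError(f"no student found for username: {username}")
    return Student(data[0], data[1], data[2], calculate_gpa(data[3]))
```

Calling find_student("alice") returns a Student record, while an unknown username raises ValueError.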

We can use unit testing, in which we hard-code a set of inputs and list our expected return value for each input.

- Python

- C++

- JavaScript

- Java

from datetime import date

from code_under_test import find_student

def test_find_student():
    alice = find_student("alice")
    assert alice.fullname == "Alice Anderson"
    assert alice.dob == date(2006, 3, 1)
    assert alice.gpa == 3.9

#include "catch2/catch.hpp"
#include "code_under_test.hpp"

TEST_CASE("test_find_student") {
    const auto& alice = find_student("alice");
    CHECK(alice.fullname == "Alice Anderson");
    CHECK(alice.dob == Date{2006, 3, 1});
    CHECK(alice.gpa == 3.9);
}

import { find_student } from "code_under_test";

test("test_find_student", async () => {
    // find_student is async, so we await its result.
    const alice = await find_student("alice");
    expect(alice.fullname).toEqual("Alice Anderson");
    // JavaScript months are zero-based: 2 is March.
    expect(alice.dob).toEqual(new Date(2006, 2, 1));
    expect(alice.gpa).toEqual(3.9);
});

import java.time.LocalDate;
import org.junit.jupiter.api.Test;
import static org.junit.jupiter.api.Assertions.assertEquals;

public final class StudentsTest {
    @Test
    public void findStudent() {
        final Student alice = Students.findStudent("alice");
        // JUnit expects the expected value first, then the actual value.
        assertEquals("Alice Anderson", alice.fullname);
        assertEquals(LocalDate.of(2006, 3, 1), alice.dob);
        assertEquals(3.9, alice.gpa);
    }
}

Unit tests require calling our code under test with a hard-coded set of inputs and comparing the return value of our function against a hard-coded set of expected values. We would need a separate block of assertions for every input to our code under test. This could be prohibitive for workflows that need to handle a variety of different inputs.
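To make this cost concrete, here is a sketch of what covering more inputs looks like with a plain unit test. The stub find_student and the record for bob are hypothetical stand-ins so that the snippet runs on its own. The point is the EXPECTED table: every new input needs another hand-written entry of expected values.

```python
import datetime

# Hypothetical stand-in for the code under test, so the sketch is runnable.
_RECORDS = {
    "alice": ("Alice Anderson", datetime.date(2006, 3, 1)),
    "bob": ("Bob Brown", datetime.date(1996, 6, 30)),
}


def find_student(username: str) -> dict:
    fullname, dob = _RECORDS[username]
    return {"username": username, "fullname": fullname, "dob": dob}


# Every input needs its own hard-coded expectations, maintained by hand.
EXPECTED = {
    "alice": {"fullname": "Alice Anderson", "dob": datetime.date(2006, 3, 1)},
    "bob": {"fullname": "Bob Brown", "dob": datetime.date(1996, 6, 30)},
    # ... one more entry for each additional username we want to cover
}


def test_find_student():
    for username, expected in EXPECTED.items():
        student = find_student(username)
        for field, value in expected.items():
            assert student[field] == value, f"{username}.{field}"
```

With two students this is manageable, but the table has to be extended and kept up to date by hand for every input and every field we care about.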

Touca takes a different approach:

- Python

- C++

- JavaScript

- Java

import touca
from students import find_student

@touca.workflow
def students_test(username: str):
    student = find_student(username)
    touca.assume("username", student.username)
    touca.check("fullname", student.fullname)
    touca.check("birth_date", student.dob)
    touca.check("gpa", student.gpa)

#include "students.hpp"
#include "students_types.hpp"
#include "touca/touca.hpp"

int main(int argc, char* argv[]) {
    touca::workflow("find_student", [](const std::string& username) {
        const auto& student = find_student(username);
        touca::assume("username", student.username);
        touca::check("fullname", student.fullname);
        touca::check("birth_date", student.dob);
        touca::check("gpa", student.gpa);
    });
    return touca::run(argc, argv);
}

import { touca } from "@touca/node";
import { find_student } from "./students";

touca.workflow("students_test", async (username: string) => {
    const student = await find_student(username);
    touca.assume("username", student.username);
    touca.check("fullname", student.fullname);
    touca.check("birth_date", student.dob);
    touca.check("gpa", student.gpa);
});

touca.run();

import io.touca.Touca;

public final class StudentsTest {

    @Touca.Workflow
    public void findStudent(final String username) {
        Student student = Students.findStudent(username);
        Touca.assume("username", student.username);
        Touca.check("fullname", student.fullname);
        Touca.check("birth_date", student.dob);
        Touca.check("gpa", student.gpa);
    }

    public static void main(String[] args) {
        Touca.run(StudentsTest.class, args);
    }
}

Unlike unit tests:

- Touca tests do not use expected values.

- Touca test inputs are decoupled from the test code.

Similar to property-based testing, Touca test workflows take their input as a parameter. For each test input, we can call our code under test and use the Touca SDK's data capturing functions to describe its behavior and performance by capturing the values of interesting variables and the runtime of important functions. Touca will notify us if this description changes in a future version of our software.
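The idea of describing behavior and comparing it across versions can be illustrated without any SDK. The following is a conceptual sketch only: run_workflow, diff_against_baseline, and the two workflow versions are hypothetical helpers written for this illustration, not Touca functions. With Touca, the SDK and the server take care of storing, comparing, and reporting the captured data.

```python
import time
from typing import Any, Callable, Dict, List

Results = Dict[str, Dict[str, Any]]


def run_workflow(
    workflow: Callable[[str], Dict[str, Any]], testcases: List[str]
) -> Results:
    """Run the workflow once per test case, recording its values and runtime."""
    results: Results = {}
    for name in testcases:
        start = time.perf_counter()
        values = workflow(name)
        elapsed_ms = (time.perf_counter() - start) * 1000
        results[name] = {"values": values, "runtime_ms": elapsed_ms}
    return results


def diff_against_baseline(baseline: Results, current: Results) -> List[str]:
    """List every captured value that differs from the baseline version."""
    differences = []
    for name, case in current.items():
        old_values = baseline.get(name, {}).get("values", {})
        for key, value in case["values"].items():
            if old_values.get(key) != value:
                differences.append(
                    f"{name}.{key}: {old_values.get(key)!r} -> {value!r}"
                )
    return differences


# Two hypothetical versions of the code under test; v2 reports a different GPA.
def workflow_v1(username: str) -> Dict[str, Any]:
    return {"fullname": username.title(), "gpa": 3.9}


def workflow_v2(username: str) -> Dict[str, Any]:
    return {"fullname": username.title(), "gpa": 3.85}


baseline = run_workflow(workflow_v1, ["alice", "bob"])
current = run_workflow(workflow_v2, ["alice", "bob"])
print(diff_against_baseline(baseline, current))
```

No expected values appear anywhere in this sketch. The first version's captured output serves as the baseline, and the comparison reports only what changed, here the gpa value for both test cases.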

There is more that we can cover, but let's accept the code above as the first version of our Touca test and prepare to run it.

- Python

- C++

- JavaScript

- Java

cd trytouca/examples/python/02_python_main_api

pip install touca

cd trytouca/examples/cpp/02_cpp_main_api

../build.sh

The build script uses CMake to pull the Touca C++ SDK as a dependency and writes the build artifacts into the ./local/dist/bin directory.

cd trytouca/examples/js/02_node_main_api

npm install

npm run build

cd trytouca/examples/java

./gradlew build

Now we can set our API credentials:

- Using CLI Login

- Using CLI Config

- Using Environment Variables

touca login

See touca login to learn more.

touca config set api-key="<your-api-key>"

touca config set api-url="<your-api-url>"

See touca config to learn more.

export TOUCA_API_KEY="<your-api-key>"

export TOUCA_API_URL="<your-api-url>"

See here to learn more.

Then we can run our tests with any number of inputs from the command line:

- Python

- C++

- JavaScript

- Java

touca test --testcase alice bob charlie

./local/dist/bin/example_cpp_main_api --testcase alice,bob,charlie

node 02_node_main_api/dist/students_test.js --testcase alice bob charlie

gradle runExampleMain --args='--testcase alice bob charlie'

In real-world scenarios, we may have too many test cases to list as command-line arguments. Touca SDKs allow you to programmatically declare your test cases for each workflow. See Setting Test Cases to learn how.

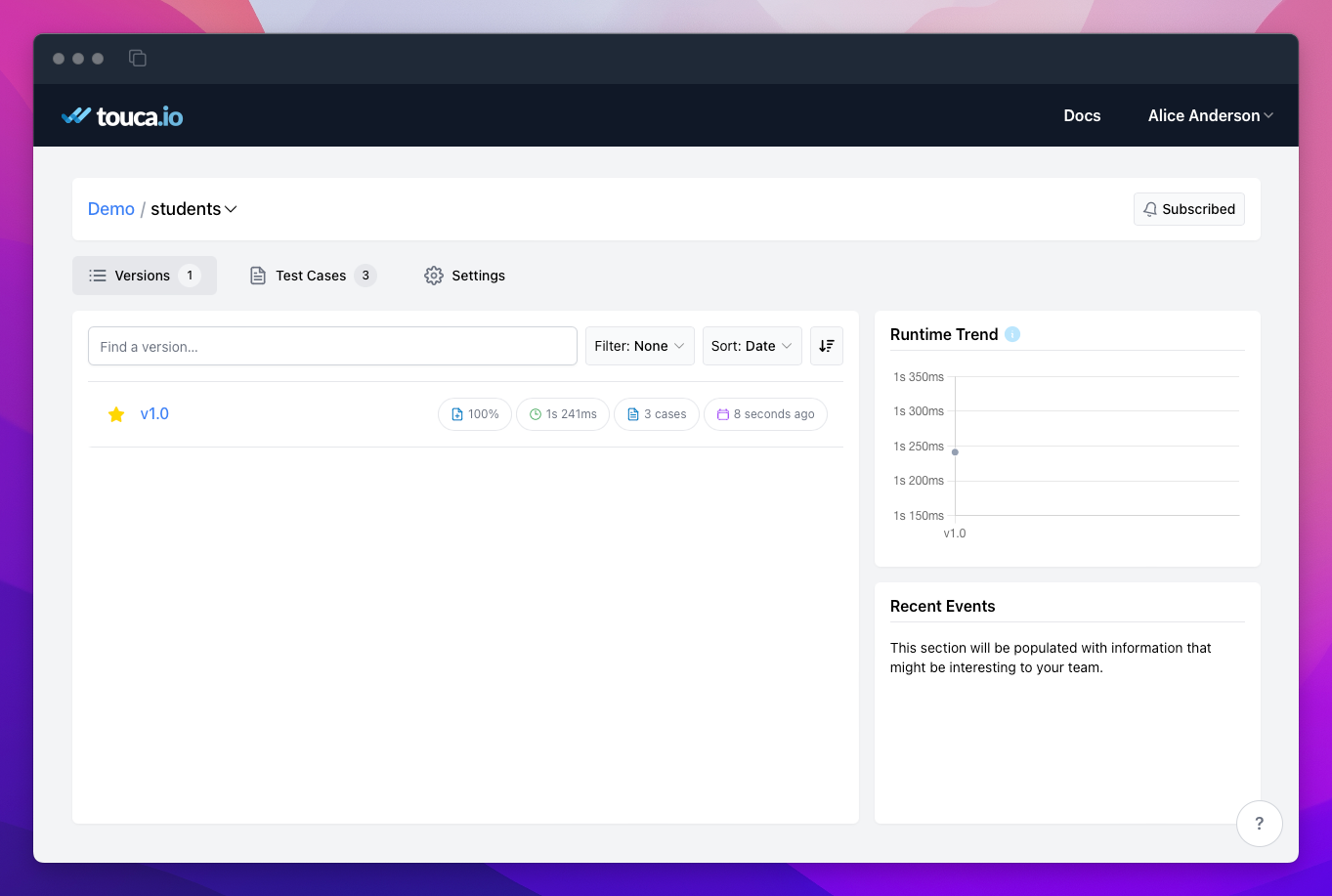

The above command produces the following output.

We can see our captured data points submitted to the server.

If this was your first ever Touca test, cheers to many more! 🥂