Capturing Test Results

In the previous tutorial, we reviewed the main Touca functions for describing the behavior and performance of our code under test, by capturing values of important variables and runtime of interesting functions. In this section, we dive a little deeper to explain how Touca tracks values of variables and performance benchmarks.

Preserving Data Types

Touca data capturing functions, such as check, preserve the types of all captured data so that the Touca server can compare them in their original type.

The examples below cover the Python, C++, JavaScript, and Java SDKs, in that order.

touca.check("username", student.username)

touca.check("fullname", student.fullname)

touca.check("birth_date", student.dob)

touca.check("gpa", student.gpa)

In the example above, touca.check stores the values of properties username and fullname as strings, while properties dob and gpa are stored as datetime.date and float respectively. The server visualizes possible differences in these values based on their types.

The SDK is designed to handle iterables and custom objects by serializing their elements and properties. This makes it possible for us to add object student as a single entity, if we so choose.
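
To illustrate why preserving types matters, here is a minimal sketch that does not use the Touca SDK. The names CapturedValue, capture, and describe_difference are hypothetical and only show the idea of keeping a value's type next to the value, so that a change of type can be reported differently from a change of value. How the SDK and the server actually implement this is not shown here.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CapturedValue:
    """Hypothetical record pairing a captured value with its type name."""
    type_name: str
    value: object


def capture(value: object) -> CapturedValue:
    # Keep the original type next to the value instead of flattening to text.
    return CapturedValue(type(value).__name__, value)


def describe_difference(baseline: CapturedValue, actual: CapturedValue) -> str:
    # A change of type is reported separately from a change of value.
    if baseline.type_name != actual.type_name:
        return f"type changed from {baseline.type_name} to {actual.type_name}"
    if baseline.value != actual.value:
        return f"{baseline.type_name} changed from {baseline.value!r} to {actual.value!r}"
    return "same"


gpa_before = capture(3.9)
gpa_as_text = capture("3.9")
dob_before = capture(date(2000, 1, 31))
dob_after = capture(date(2000, 2, 1))
```

If everything were stored as text, the float 3.9 and the string "3.9" would look identical. Keeping the type allows the comparison to tell them apart.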

touca::check("username", student.username);

touca::check("fullname", student.fullname);

touca::check("gpa", student.gpa);

In the example above, touca::check stores the values of properties username and fullname as std::string, while property gpa is stored as float. The server visualizes possible differences in these values based on their types.

touca.check("username", student.username);

touca.check("fullname", student.fullname);

touca.check("birth_date", student.dob);

touca.check("gpa", student.gpa);

In the example above, touca.check stores the values of properties username and fullname as strings, while properties dob and gpa are stored as Date and number respectively. The server visualizes possible differences in these values based on their types.

The SDK is designed to handle iterables and custom objects by serializing their elements and properties. This makes it possible for us to add object student as a single entity, if we so choose.

Touca.check("username", student.username);

Touca.check("fullname", student.fullname);

Touca.check("birth_date", student.dob);

Touca.check("gpa", student.gpa);

In the example above, Touca.check stores the values of properties username and fullname as String, while properties dob and gpa are stored as java.time.LocalDate and double respectively. The server visualizes possible differences in these values based on their types.

The SDK is designed to handle iterables and custom objects by serializing their elements and properties. This makes it possible for us to add object student as a single entity, if we so choose.

Customizing Data Serialization

The examples below cover the Python, C++, JavaScript, and Java SDKs, in that order.

While Touca data capturing functions automatically support objects and custom types, it is possible to override the serialization logic for any given non-primitive data type.

Consider the following definition for a custom class Course.

from dataclasses import dataclass

@dataclass
class Course:
    name: str
    grade: float

By default, the SDK serializes objects of this class by serializing all of its public properties. As a result, the object Course("math", 3.9) is serialized as {name: "math", grade: 3.9}. We can use touca.add_serializer to override this default behavior. With the following code, the same object is serialized as ["math", 3.9]:

touca.add_serializer(Course, lambda x: [x.name, x.grade])
for course in student.courses:
    touca.add_array_element("courses", course)
    touca.add_hit_count("number of courses")

While our serializer changed the way Course data is serialized and visualized, it still preserved both properties of this object. If we would like to exclude the property name during serialization and limit the comparison to grade, we could use lambda x: x.grade instead.
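
The override behavior described above can be pictured as a lookup table from types to functions. The following is a minimal sketch of that idea, not the SDK's implementation: register_serializer and serialize are hypothetical names, and the default branch assumes that "all public properties" can be approximated by the fields of a dataclass.

```python
from dataclasses import dataclass, fields, is_dataclass
from typing import Any, Callable, Dict

# Hypothetical registry; the SDK's internal storage may look different.
_serializers: Dict[type, Callable[[Any], Any]] = {}


def register_serializer(datatype: type, serializer: Callable[[Any], Any]) -> None:
    _serializers[datatype] = serializer


def serialize(value: Any) -> Any:
    # A registered serializer takes precedence over the default behavior.
    custom = _serializers.get(type(value))
    if custom is not None:
        return serialize(custom(value))
    # Default for dataclass instances: one entry per field.
    if is_dataclass(value) and not isinstance(value, type):
        return {f.name: serialize(getattr(value, f.name)) for f in fields(value)}
    # Iterables are serialized element by element.
    if isinstance(value, (list, tuple)):
        return [serialize(item) for item in value]
    return value


@dataclass
class Course:
    name: str
    grade: float


course = Course("math", 3.9)
default_form = serialize(course)

register_serializer(Course, lambda x: [x.name, x.grade])
custom_form = serialize(course)

register_serializer(Course, lambda x: x.grade)
grade_only = serialize(course)
```

Because the registry is keyed by type, registering a serializer once is enough for every later object of that type, including objects nested inside lists.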

The Touca C++ SDK has built-in support for many commonly used types of the standard library and can be extended to support custom data types.

Consider the following definition for a user-defined type Date.

struct Date {
  unsigned short year;
  unsigned short month;
  unsigned short day;
};

Using the natively supported types, we can add a value of type Date as three separate test results, one for each member variable. But this practice is cumbersome and impractical for real-world complex data types. To solve this, we can extend the Touca type system to support type Date by specializing the touca::serializer template.

#include "touca/touca.hpp"

template <>
struct touca::serializer<Date> {
  data_point serialize(const Date& value) {
    return object("Date")
        .add("year", value.year)
        .add("month", value.month)
        .add("day", value.day);
  }
};

Once the client library learns how to handle a custom type, it automatically supports handling it as a sub-component of other types. As an example, with the above-mentioned template specialization for type Date, we can start adding test results of type std::vector<Date> or std::map<std::string, Date>. Additionally, supporting type Date enables objects of this type to be used as smaller components of even more complex types.

touca::check("birth_date", student.dob);

Consult the API reference documentation for more information and examples on supporting custom types.

Touca SDKs have native support for primitive data types such as integers and floating point numbers, characters and strings, arrays and maps, and other commonly used data types. You can extend and override the built-in serialization logic for any given non-primitive data type.

Consider the following definition for a custom class Course.

export class Course {
  constructor(public readonly name: string, public readonly grade: number) {}
}

By default, the SDK serializes objects of this class by serializing all of its public properties. As a result, the object Course('math', 3.9) is serialized as {name: 'math', grade: 3.9}. We can use touca.add_serializer to override this default behavior. With the following code, the same object is serialized as ['math', 3.9]:

touca.add_serializer(Course.name, (x: Course) => [x.name, x.grade]);
for (const course of student.courses) {
  touca.add_array_element("courses", course);
  touca.add_hit_count("number of courses");
}

While our serializer changed the way Course data is serialized and visualized, it still preserved both properties of this object. If we would like to exclude the property name during serialization and limit the comparison to grade, we could use (x: Course) => x.grade instead.

While Touca data capturing functions automatically support objects and custom types, it is possible to override the serialization logic for any given non-primitive data type.

Consider the following definition for a custom class Course.

public final class Course {
  public String name;
  public double grade;

  public Course(final String name, final double grade) {
    this.name = name;
    this.grade = grade;
  }
}

By default, the SDK serializes objects of this class by serializing all of its public properties. As a result, the object Course("math", 3.9) is serialized as {name: "math", grade: 3.9}. We can use Touca.addTypeAdapter to override this default behavior. The following code excludes the property name during serialization and limits the comparison to grade:

Touca.addTypeAdapter(Course.class, course -> course.grade);
for (Course course : student.courses) {
  Touca.addArrayElement("courses", course);
  Touca.addHitCount("number of courses");
}

It is sufficient to register each serializer once per lifetime of the test application.

Adding Comparison Rules

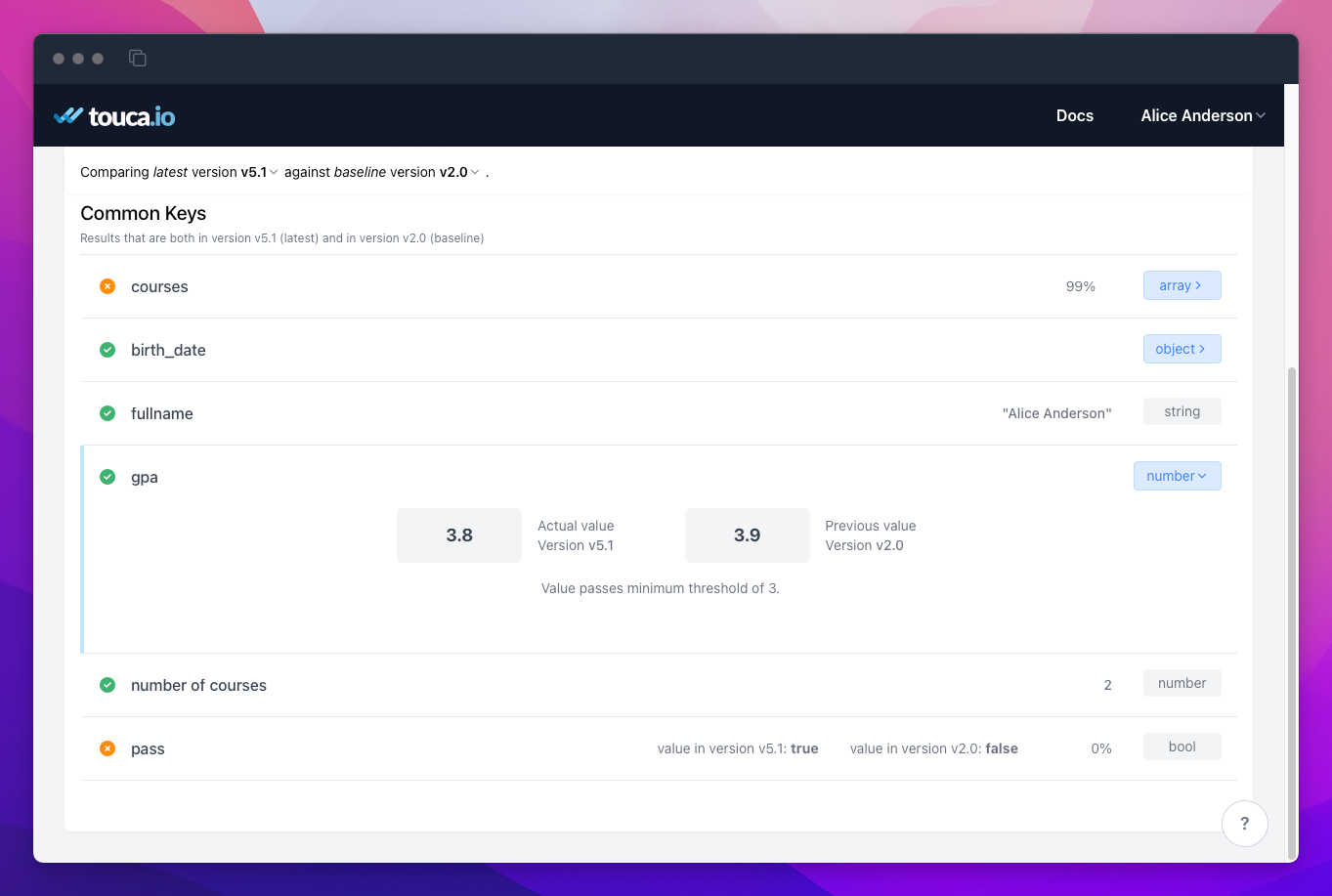

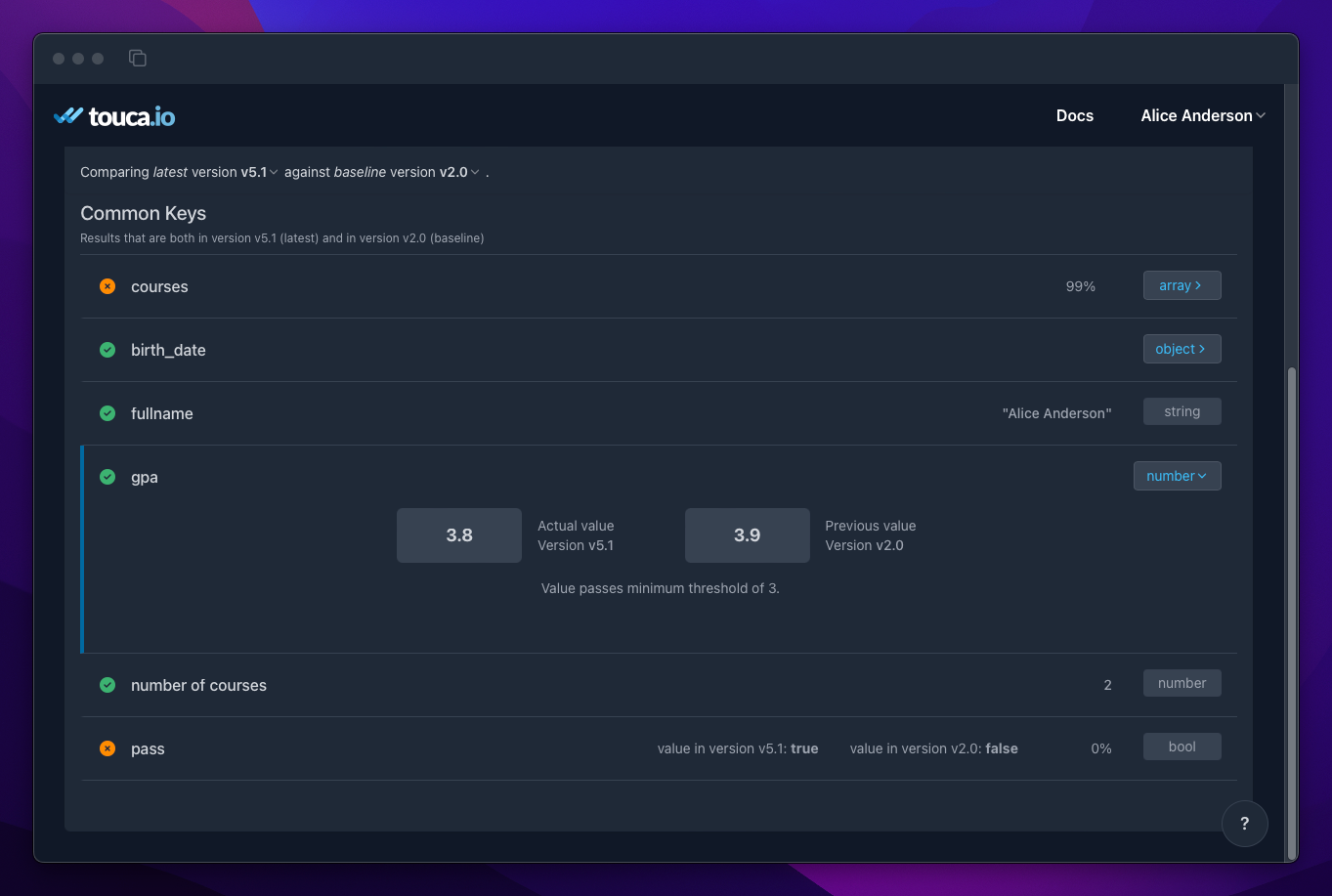

Often, we expect the value of each data point in the actual version to be exactly the same as in the baseline version. Sometimes, however, we may want to capture and visualize numerical data points (e.g. the prediction score of a machine learning algorithm) that may include small deviations from the baseline version.

We can use custom comparison rules to set acceptable minimum and maximum thresholds, in absolute value or as percentage of the baseline value, that would allow capturing these data points without flagging every difference as a regression.

The examples below cover the Python, C++, JavaScript, and Java SDKs, in that order.

touca.check("gpa", student.gpa, rule=touca.decimal_rule.absolute(min=3))

The C++ SDK does not support this feature yet.

touca.check("gpa", student.gpa, {
  rule: { type: "number", mode: "absolute", min: 3 }
});

Touca.check("gpa", student.gpa, DecimalRule.absolute(x -> {
  x.setMin(3);
}));

With the Java SDK, you need to explicitly import the comparison rule that you are using.

import io.touca.rules.DecimalRule;

When the actual value falls within the threshold, the data point is treated as if it is the same as the baseline version but the server visualizes both actual and baseline values to help you see the difference.
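
As a rough illustration of threshold-based comparison, the sketch below shows one way such rules could be evaluated. It is not the server's implementation. The function names are hypothetical, and it rests on two assumptions: in absolute mode the bounds apply to the actual value itself, and in percentage mode the deviation is measured as a fraction of the baseline value.

```python
from typing import Optional


def passes_absolute(actual: float, lower: Optional[float] = None,
                    upper: Optional[float] = None) -> bool:
    # Assumed reading: the bounds apply to the actual value itself.
    if lower is not None and actual < lower:
        return False
    if upper is not None and actual > upper:
        return False
    return True


def passes_relative(actual: float, baseline: float, max_fraction: float) -> bool:
    # Assumed reading: the deviation is a fraction of the baseline value.
    if baseline == 0:
        return actual == 0
    return abs(actual - baseline) / abs(baseline) <= max_fraction
```

Under these assumptions, a gpa of 3.4 passes an absolute rule with a minimum of 3, and a prediction score of 3.8 against a baseline of 4.0 passes a 10% relative rule because it deviates by only 5%.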

Choosing Test Results

The effectiveness of Touca tests depends, in part, on the data we capture. We should capture enough information to help us detect changes in behavior and performance. At the same time, capturing too much data with little value may generate false positives or make it difficult to trace a discovered regression to its root cause.

Here are some general recommendations for choosing an effective set of test results:

- Each test case corresponds to some input to the code under test. It is always helpful to capture, as test assertions, the essential characteristics that uniquely identify that input. Capturing this data allows us to quickly identify any change in the input to the code under test, and prevents us from attributing that change to our changes to the production code.

- We should only capture values of variables that are expected to remain consistent between different revisions. Capturing a variable that stores the current date and time or a randomly generated number is guaranteed to be flagged as regression and only adds noise.

- Start small. We recommend that you start by capturing a limited number of important data points from different parts of your software workflow. Variables that are in the same execution branch are likely to change together.

As a general rule, a variable should be added as a separate result when it is prone to change independently. This rule may help minimize the number of tracked data points while maintaining their effectiveness in identifying potential regressions.

Capturing Internal States

It is always a good idea to avoid testing the implementation details of a given workflow under test. But there are scenarios in which you may want to capture a data point from within your code under test that is inconvenient to access from the outside. Touca SDKs can integrate with the production code to capture values of important variables and runtime of interesting functions, even if those functions are not exposed.

Here are a few best practices if you ever intend to use Touca for capturing data points from your production code:

- As long as it is possible and convenient, it is better to reproduce our software execution workflow in the test tool and capture all or some of the information from the test tool. As an example, if the code under test provides as output a complex object with various member functions that reveal different properties of that output, it makes more sense to capture results from the test tool by explicitly calling those member functions, as opposed to adding data capturing functions to the implementation of those member functions.

- For increased readability and easier maintenance, when capturing data from the code under test, try to maintain a physical separation between Touca function calls and the function's core logic. Even though Touca function calls are a no-op in the production environment, they are still considered test-specific logic.

- Always assume that the function you are capturing results from is called by other Touca test tools that execute a different software workflow. In other words, in any function, only capture information that is relevant to that function and may be valuable to other test workflows that happen to execute that function.
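
The separation recommended above might look like the following minimal sketch. It does not use the Touca SDK: Recorder is a hypothetical stand-in for a capture API that does nothing unless it is enabled, and compute_gpa is an invented production function.

```python
from typing import Any, Dict, List


class Recorder:
    """Hypothetical stand-in for a capture API that is a no-op unless enabled."""

    def __init__(self) -> None:
        self.enabled = False
        self.results: Dict[str, Any] = {}

    def check(self, key: str, value: Any) -> None:
        if self.enabled:
            self.results[key] = value


recorder = Recorder()


def compute_gpa(grades: List[float]) -> float:
    # Core logic stays free of test-specific calls.
    gpa = sum(grades) / len(grades) if grades else 0.0

    # Capture calls are grouped in one place, apart from the core logic,
    # and only record data that is relevant to this function.
    recorder.check("course_count", len(grades))
    recorder.check("gpa", gpa)
    return gpa
```

With the recorder disabled, the function behaves exactly as it would without the capture calls. A test tool that enables it gets both data points from any workflow that happens to call compute_gpa.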

Locally Storing Test Results

You can use the configuration parameters save_binary and save_json to store the test results captured for each test case in local files, in binary or JSON format. This feature may be helpful for special cases, such as when test tools have to be run in environments that have no access to the Touca server (e.g. running with no network access).

By default, any local file generated during the test run, such as test result files in binary or JSON format, is written to a .touca/results directory. This directory is placed in the $HOME directory, unless the environment variable TOUCA_HOME_DIR is specified or a .touca directory already exists in the current working directory.
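
The lookup described above could be sketched as follows. This is an illustration under stated assumptions, not the SDK's code: resolve_results_dir is a hypothetical function, the environment variable is assumed to take precedence over a local .touca directory, and TOUCA_HOME_DIR is assumed to replace the .touca directory itself. The text above does not specify either detail.

```python
import tempfile
from pathlib import Path
from typing import Mapping


def resolve_results_dir(env: Mapping[str, str], cwd: Path, home: Path) -> Path:
    # Assumed precedence: an explicit environment variable wins.
    override = env.get("TOUCA_HOME_DIR")
    if override:
        return Path(override) / "results"
    # Next, an existing .touca directory in the current working directory.
    local = cwd / ".touca"
    if local.is_dir():
        return local / "results"
    # Otherwise, fall back to the home directory.
    return home / ".touca" / "results"


# Exercise the three cases against throwaway directories.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    cwd = root / "project"
    home = root / "home"
    cwd.mkdir()
    home.mkdir()

    default_dir = resolve_results_dir({}, cwd, home)

    (cwd / ".touca").mkdir()
    local_dir = resolve_results_dir({}, cwd, home)

    custom = root / "custom"
    env_dir = resolve_results_dir({"TOUCA_HOME_DIR": str(custom)}, cwd, home)
```

Passing the environment, working directory, and home directory as arguments keeps the function easy to test without touching the real home directory.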

You can conveniently browse and manage binary archives of the results store using the Touca CLI via the touca results command.